Research and Projects

Research

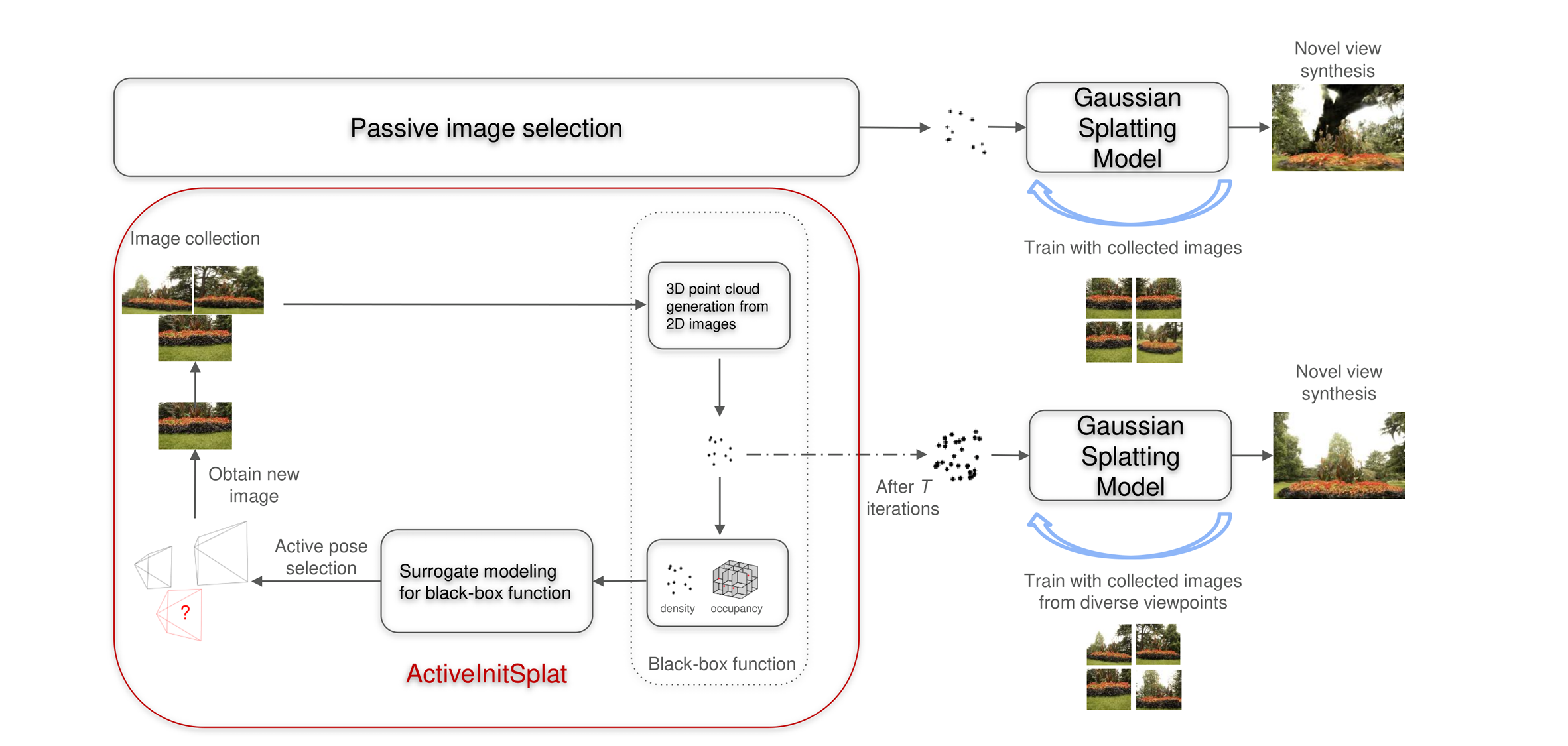

ActiveInitSplat | Gaussian Splatting with active image selection

Graduate Student Researcher under Professor Tara Javidi [Paper]

• Developed a novel active image selection framework for 3D Gaussian Splatting (3DGS) that uses density- and occupancy-based criteria with black-box optimization to ensure diverse viewpoint coverage.

• Outperformed passive selection baselines in real-time 3D scene rendering, improving LPIPS, SSIM, and PSNR by almost 5% while using fewer training images.
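
For context on the metrics above: PSNR and SSIM are higher-is-better, while LPIPS is lower-is-better. As a minimal sketch of the simplest of the three, PSNR can be computed as below. The function name `psnr` and the assumption that pixel values lie in `[0, max_value]` are illustrative and not taken from the paper.

```python
import numpy as np


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape.

    Assumes pixel values lie in [0, max_value] (illustrative convention).
    """
    reference = np.asarray(reference, dtype=np.float64)
    rendered = np.asarray(rendered, dtype=np.float64)
    mse = np.mean((reference - rendered) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)
```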

Multi-Object Tracking

Graduate Student Researcher under Professor Nikolay Atanasov

• Engineered a Kalman filter (KF)-based multi-object tracking (MOT) system using probabilistic data association (PDAKF), increasing HOTA and MOTA by almost 10%.

• Integrating ReID (neural appearance) features into the tracking pipeline, inspired by StrongSORT, to improve robustness in real-time tracking under occlusion and in cluttered scenes.
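
The probabilistic data association step mentioned above can be sketched as a single-track measurement update. This follows the textbook PDA filter with a Poisson clutter model and is not necessarily the project's implementation. The function name `pda_update` and the defaults for `p_detect`, `p_gate`, and `clutter_density` are assumptions chosen for illustration.

```python
import numpy as np


def pda_update(x_pred, P_pred, H, R, measurements,
               p_detect=0.9, p_gate=0.99, clutter_density=1e-3):
    """One PDA measurement update for a single track.

    x_pred: (n,) predicted state; P_pred: (n, n) predicted covariance;
    H: (m, n) measurement model; R: (m, m) measurement noise;
    measurements: (k, m) array of gated measurements (k may be 0).
    """
    measurements = np.asarray(measurements, dtype=float)
    S = H @ P_pred @ H.T + R                      # innovation covariance
    S_inv = np.linalg.inv(S)
    K = P_pred @ H.T @ S_inv                      # Kalman gain

    innovations = measurements - H @ x_pred       # (k, m)
    # Squared Mahalanobis distance of each innovation under S.
    d2 = np.einsum("ki,ij,kj->k", innovations, S_inv, innovations)
    likelihoods = np.exp(-0.5 * d2)

    # Weight of the "no measurement is correct" hypothesis.
    b = (clutter_density * np.sqrt(np.linalg.det(2.0 * np.pi * S))
         * (1.0 - p_detect * p_gate) / p_detect)
    denom = b + likelihoods.sum()
    betas = likelihoods / denom                   # association probabilities
    beta0 = b / denom

    nu = betas @ innovations                      # combined innovation
    x_new = x_pred + K @ nu

    P_c = P_pred - K @ S @ K.T                    # standard KF covariance
    spread = (innovations.T * betas) @ innovations - np.outer(nu, nu)
    P_new = beta0 * P_pred + (1.0 - beta0) * P_c + K @ spread @ K.T
    return x_new, P_new, betas, beta0
```

With no gated measurements, `beta0` equals 1 and the update returns the prediction unchanged.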

VLM-Based Semantic Odometry

Graduate Student Researcher under Professor Nikolay Atanasov

• Developed an end-to-end (E2E) odometry pipeline on the NVIDIA Jetson Nano that uses a foundation model (TinyCLIP) to extract semantic-spatial embeddings from RGB images and fuses them with FastSAM masks for precise localization.

• Fine-tuned the VLM on domain-specific race track data (e.g., cones, barriers) and optimized inference via TensorRT, achieving 20% higher accuracy than geometric baselines (FPFH) at 10 Hz.

Technical Projects

Text-to-3D Mesh Generation

CSE 252D: Advanced Computer Vision [Report]

Enhanced the Gaussian Dreamer text-to-3D framework by replacing its Stable Diffusion 2D prior with MV Dream and by switching the loss from Score Distillation Sampling (SDS) to Variational Score Distillation (VSD).

Employed SuGaR for efficient mesh extraction, achieving faster generation times and superior model quality across views.

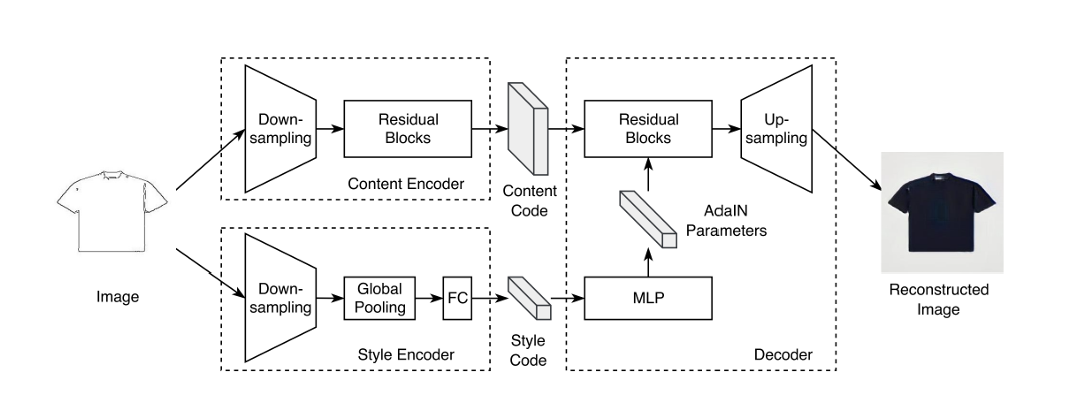

Multimodal Edge-to-RGB Image Translation

ECE 285: Intro to Visual Learning [Report]

Designed an encoder-decoder architecture using cVAE and GAN to convert edge images into realistic RGB images, enhancing scene interpretation.

Improved output diversity and realism by incorporating latent space sampling and multimodal learning, producing high-quality images that adapt better to dynamic environments.

Designing Roomba prototype

• Developed an integrated real-time motion planning and navigation system for an autonomous robot (Roomba) using the Qualcomm RB5 platform, incorporating a LiDAR and camera for environmental sensing.

• Designed and implemented path planning algorithms (A*, RRT) and integrated SLAM techniques (EKF, ICP) for precise localization and mapping, with real-time pose graph optimization and loop closure constraints.
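
As an illustration of the A* planner named above, here is a minimal sketch on a 4-connected occupancy grid with unit step costs and a Manhattan-distance heuristic. The grid encoding (`0` free, `1` obstacle) and the function name `astar` are assumptions for this example and do not reflect the robot's actual map representation.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (obstacle) cells.

    Returns a list of (row, col) cells from start to goal, or None if
    the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]   # (f, g, cell)
    came_from = {}
    g_cost = {start: 0}

    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if g > g_cost[current]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = current
                    heapq.heappush(
                        open_heap,
                        (new_g + heuristic((nr, nc)), new_g, (nr, nc)),
                    )
    return None
```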

Robot Arm Simulation and Perception

ECE 276C: Robot Manipulation and Control [Report]

• Simulated a Franka Panda 7-DOF robotic arm on an omnidirectional mobile base in PyBullet, integrating an end-effector-mounted camera for real-time object tracking, motion estimation, and visual feedback control.

• Developed an enhanced RRT* algorithm for mobile base path planning that maintains manipulator reachability, and designed a combined motion planner for efficient reaching behaviors.

6D Object Pose Estimation

Developed an end-to-end pipeline for estimating the 6D pose of objects from RGB-D input using a PointNet architecture, with Roboflow for data annotation.

Applied point cloud processing techniques such as Farthest Point Sampling to refine pose estimates, achieving 80% accuracy within a 5° error threshold.
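
Farthest Point Sampling, mentioned above, can be sketched in a few lines of NumPy. This is the generic greedy algorithm rather than the project's code. The function name `farthest_point_sampling` and the `start_index` parameter are illustrative, and `num_samples` is assumed to be at least 1.

```python
import numpy as np


def farthest_point_sampling(points, num_samples, start_index=0):
    """Greedily pick indices of points that are mutually far apart.

    points: (n, d) array; returns an array of min(num_samples, n) indices.
    Assumes num_samples >= 1.
    """
    points = np.asarray(points, dtype=np.float64)
    n = points.shape[0]
    num_samples = min(num_samples, n)
    selected = np.empty(num_samples, dtype=np.int64)
    selected[0] = start_index
    # Squared distance from every point to its nearest selected point so far.
    min_dist2 = np.full(n, np.inf)
    for i in range(1, num_samples):
        diff = points - points[selected[i - 1]]
        min_dist2 = np.minimum(min_dist2, np.einsum("ij,ij->i", diff, diff))
        selected[i] = int(np.argmax(min_dist2))
    return selected
```

Each iteration costs O(n), so sampling k points costs O(nk), which is usually acceptable for downsampling a cloud before feeding it to a network such as PointNet.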

Application of DL approaches for Sinogram-based detections

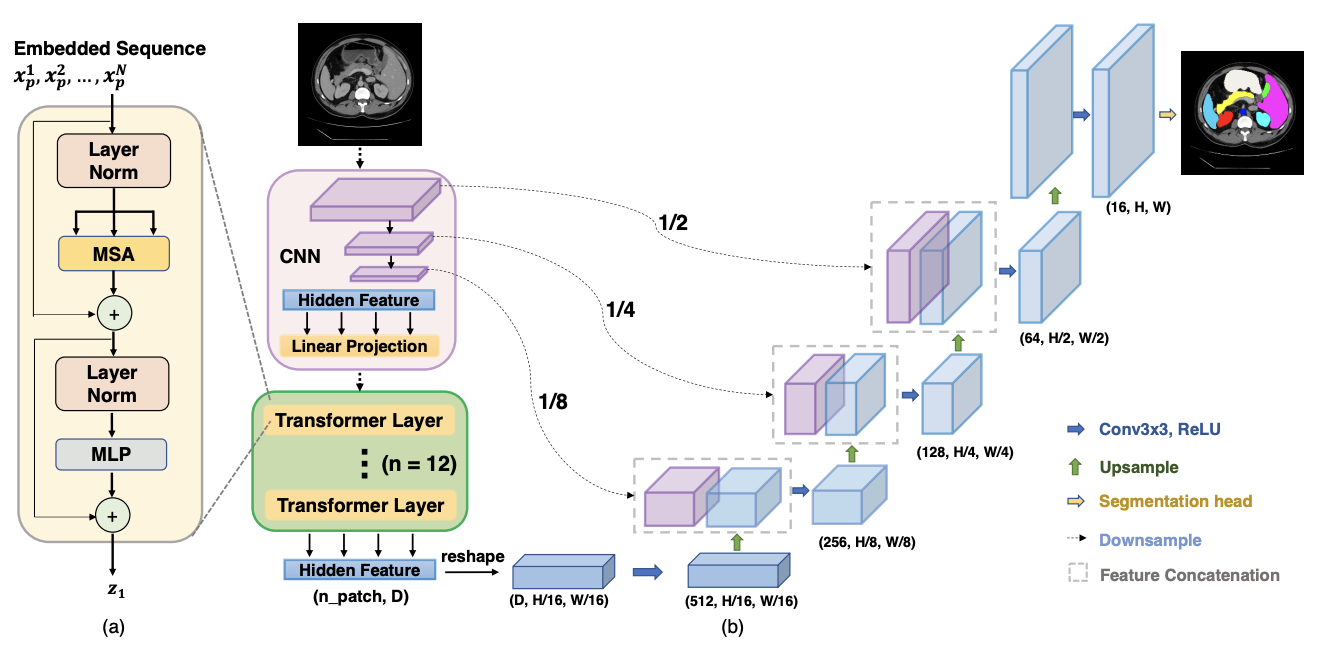

Implemented a TransUNet architecture to automatically generate windowed sinograms from raw sinogram data, enhancing image quality and diagnostic accuracy in medical imaging applications.

Compared windowed sinogram outputs across several TransUNet configurations to assess how performance varies with the number of transformers used.

Computing framework for Autonomous Vehicles

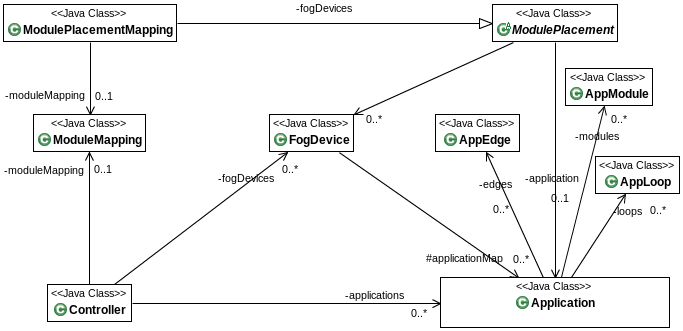

Presented at IEEE ROBIO-2023 [PDF]

Examined simulation outcomes (mainly energy consumption and data transmission) for fog nodes in a dynamic-bandwidth environment, using iFogSim as the simulation framework.

Laid the groundwork for SDN-based optimization, including switching between star and mesh topologies as needed.